What a mouthful that title is. This post is a WIP.

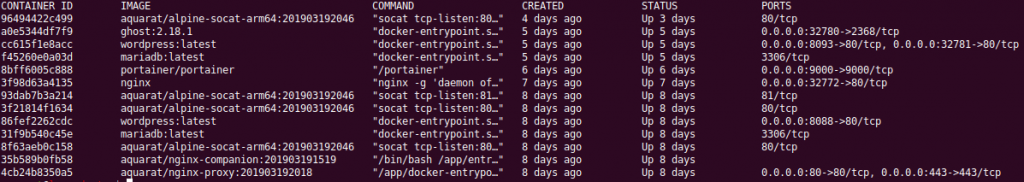

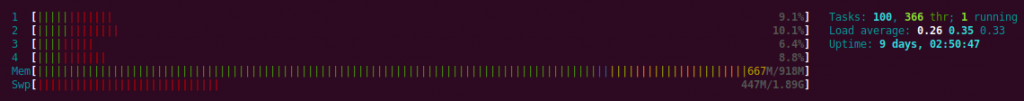

I recently discovered the joys (😉) of running docker containers on armhf and arm64 machines. This is a quick guide, mostly for myself, so I can reproduce the steps to creating dockerhub autobuilding images for multiple architectures.

AKA If you have a project, hosted in a public repository like Github or Bitbucket and your project may be run in a docker container on hosts with different CPU architectures, this is how you can get DockerHub to Autobuild your project.

Start by enabling "experimental" CLI features in your docker client : (add the "experimental" key and value)

cat ~/.docker/config.json

{

"auths": {

"https://index.docker.io/v1/": {}

},

"HttpHeaders": {

"User-Agent": "Docker-Client/17.12.1-ce (linux)"

},

"credsStore": "secretservice",

"experimental": "enabled"

}

and your docker daemon : (and again, add "experimental")

cat /etc/docker/daemon.json

{

"experimental": true,

"runtimes": {

"nvidia": {

"path": "nvidia-container-runtime",

"runtimeArgs": []

}

}

}

Either create a new repository on DockerHub using the web interface or push an existing image to DockerHub (which automatically creates the repository) :

docker push aquarat/volantmq:amd64

In your repository, create the file structure described below and populate them accordingly. The documentation for this structure can be found here.

File structure : (largely lifted from this awesome Github answer)

├── Dockerfile

├── Dockerfile.aarch64

├── Dockerfile.armhf

└── hooks

├── build

├── post_checkout

└── pre_build

hooks/build :

#!/bin/bash

docker build

--file "${DOCKERFILE_PATH}"

--build-arg BUILD_DATE="$(date -u +"%Y-%m-%dT%H:%M:%SZ")"

--build-arg VCS_REF="$(git rev-parse --short HEAD)"

--tag "$IMAGE_NAME"

.

hooks/post_checkout:

#!/bin/bash

BUILD_ARCH=$(echo "${DOCKERFILE_PATH}" | cut -d '.' -f 2)

[ "${BUILD_ARCH}" == "Dockerfile" ] &&

{ echo 'qemu-user-static: Download not required for current arch'; exit 0; }

QEMU_USER_STATIC_ARCH=$([ "${BUILD_ARCH}" == "armhf" ] && echo "${BUILD_ARCH::-2}" || echo "${BUILD_ARCH}")

QEMU_USER_STATIC_DOWNLOAD_URL="https://github.com/multiarch/qemu-user-static/releases/download"

QEMU_USER_STATIC_LATEST_TAG=$(curl -s https://api.github.com/repos/multiarch/qemu-user-static/tags

| grep 'name.*v[0-9]'

| head -n 1

| cut -d '"' -f 4)

curl -SL "${QEMU_USER_STATIC_DOWNLOAD_URL}/${QEMU_USER_STATIC_LATEST_TAG}/x86_64_qemu-${QEMU_USER_STATIC_ARCH}-static.tar.gz"

| tar xzv

hooks/pre_build:

#!/bin/bash

BUILD_ARCH=$(echo "${DOCKERFILE_PATH}" | cut -d '.' -f 2)

[ "${BUILD_ARCH}" == "Dockerfile" ] &&

{ echo 'qemu-user-static: Registration not required for current arch'; exit 0; }

docker run --rm --privileged multiarch/qemu-user-static:register --reset

Dockerfile -> Your standard amd64 Dockerfile.

An example of the start of this would be VolantMQ’s Dockerfile :

cat Dockerfile.armhf

FROM golang:1.11.1 as builder

LABEL stage=intermediate

and now Dockerfile.armhf, our armhf build :

cat Dockerfile.armhf

FROM golang:1.11.1 as builder

LABEL stage=intermediate

COPY qemu-arm-static /usr/bin/

"qemu-arm-static" is a binary executable that acts as an emulator for armhf executables. It is downloaded by the pre_build script, which is called by DockerHub during the autobuild.

Dockerfile.aarch64:

cat Dockerfile.aarch64

FROM golang:1.11.1 as builder

LABEL stage=intermediate

COPY qemu-aarch64-static /usr/bin/

In order to allow the docker container to use this emulator you’ll need to register it as a binary executable handler (this tells the kernel how to deal with specific files). This should be covered by pre_build, but in case it isn’t: In Ubuntu install qemu-user-static :

qemu-user-static

or execute a docker image :

docker run --rm --privileged vicamo/binfmt-qemu:latest

Once you’ve got this done, you can test your builds locally, like so :

DOCKERFILE_PATH=Dockerfile.aarch64 IMAGE_NAME=aquarat/volantmq:latest-aarch64 bash -c "hooks/post_checkout && hooks/build"

DOCKERFILE_PATH=Dockerfile.armhf IMAGE_NAME=aquarat/volantmq:latest-arm bash -c "hooks/post_checkout && hooks/build"

DOCKERFILE_PATH=Dockerfile IMAGE_NAME=aquarat/volantmq:latest-amd64 bash -c "hooks/post_checkout && hooks/build"

If that works, you can get pave the way for the dockerhub manifest by pushing your newly-created images to dockerhub:

docker push aquarat/volantmq:latest-amd64

docker push aquarat/volantmq:latest-arm64

docker push aquarat/volantmq:latest-arm

You may need to log your docker client in : docker login

You should then commit your changes to your repository and push.

You’ll need to annotate your manifest images :

# Create a manifest that describes your DockerHub repository

# This takes the form of the multi-arch "virtual" image and then its constituent images.

docker manifest create aquarat/volantmq:latest aquarat/volantmq:aarch64 aquarat/volantmq:armhf aquarat/volantmq:amd64

# Tag each non-amd64 image apropriately

docker manifest annotate aquarat/volantmq:latest aquarat/volantmq:armhf --os linux --arch arm

docker manifest annotate aquarat/volantmq:latest aquarat/volantmq:aarch64 --os linux --arch arm64 --variant armv8

# and then push your changes to DockerHub

docker manifest push aquarat/volantmq

# and then to inspect the result :

docker run --rm mplatform/mquery aquarat/volantmq

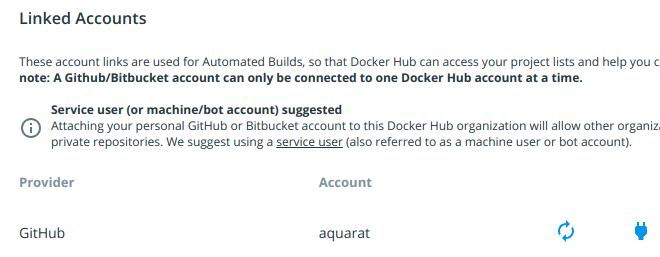

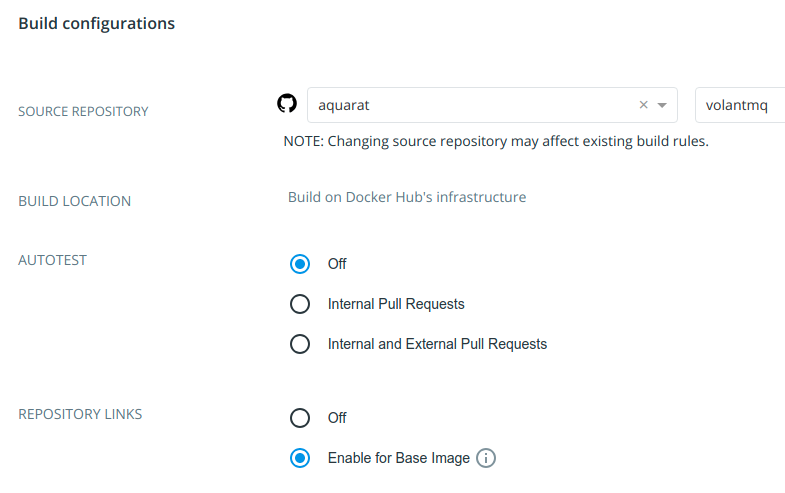

Connect your dockerhub account to your Bitbucket/Github account. This can be found in your dockerhub profile page : https://cloud.docker.com/u/somecoolnick/settings

Go back to your repository, click the “Builds” tab and click “Configure Automated Builds”.

Set up the source repository.

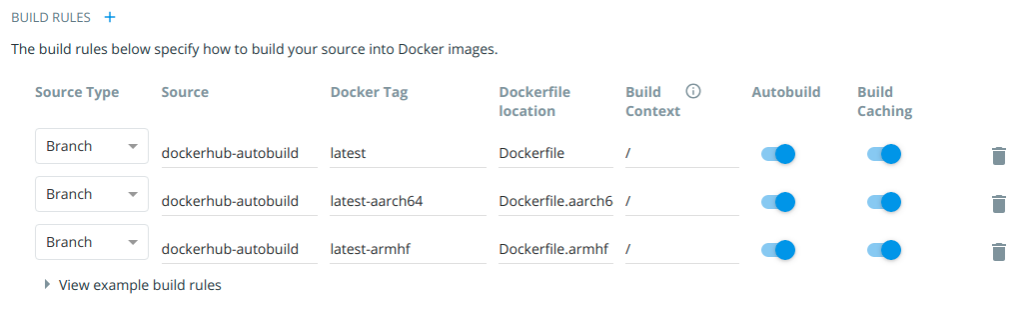

and then set up some build rules :

Click “Save and Build” and watch what happens. It takes a while to build.